AI Detection vs Provenance: Why Probability Scores Are Failing in 2026

AI detection is failing in 2026. Learn why probability scores don’t prove authorship, how provenance works, and what replaces AI detectors going forward.

Throughout 2025, AI detection worked like a courtroom drama.

Paste text in, wait for a score, and hope it says “0% AI.”

In 2026, that model is breaking.

This is because probability scores alone can’t keep up with how content is actually created anymore.

The core problem with AI detection in 2026

AI detectors answer one question:

“Does this text statistically resemble AI output?”

They do not answer:

- Who wrote it

- How it was written

- Whether AI was used as a tool, assistant, or editor

That distinction mattered less when people were copy-pasting raw ChatGPT outputs.

It matters a lot now, because most modern writing looks like this:

- Human intent

- AI for ideation or restructuring

- Human edits

- AI polish

- Final human judgment

Detectors see only the final pattern, not the creative process. That’s where false positives and misplaced trust come from.

Example 1: GPTZero and probabilistic uncertainty

GPTZero is widely used in education and publishing. It does a solid job of identifying raw AI output.

But with edited or AI-assisted writing, GPTZero often returns:

- “Uncertain”

- “Mixed authorship”

- Highlighted sentences without clear conclusions

This is an honest limitation, not a flaw. GPTZero is doing probability math on surface-level signals:

- sentence predictability

- structural smoothness

- word frequency

When humans edit AI or write very cleanly themselves, those signals overlap.

The result: probability without proof.

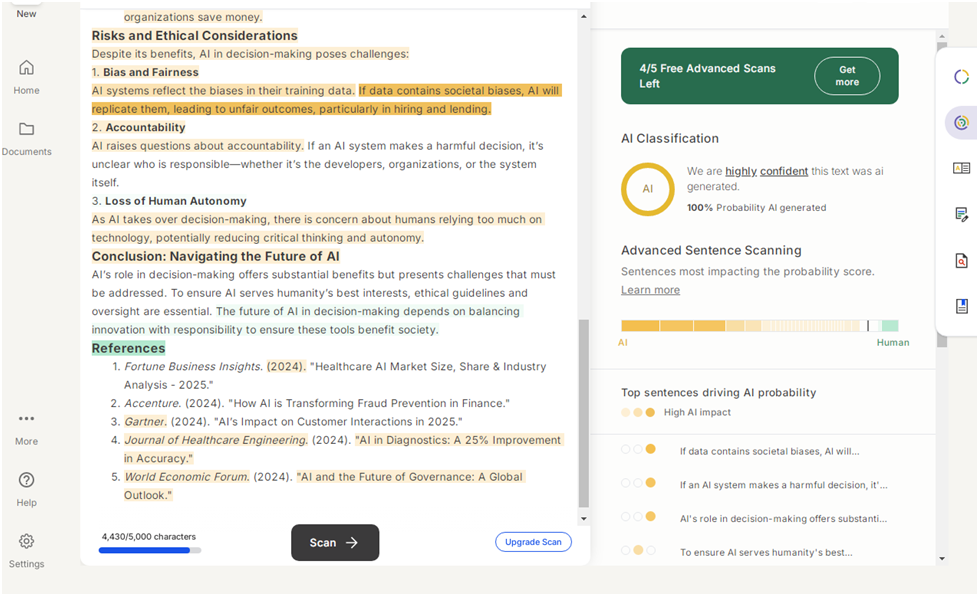

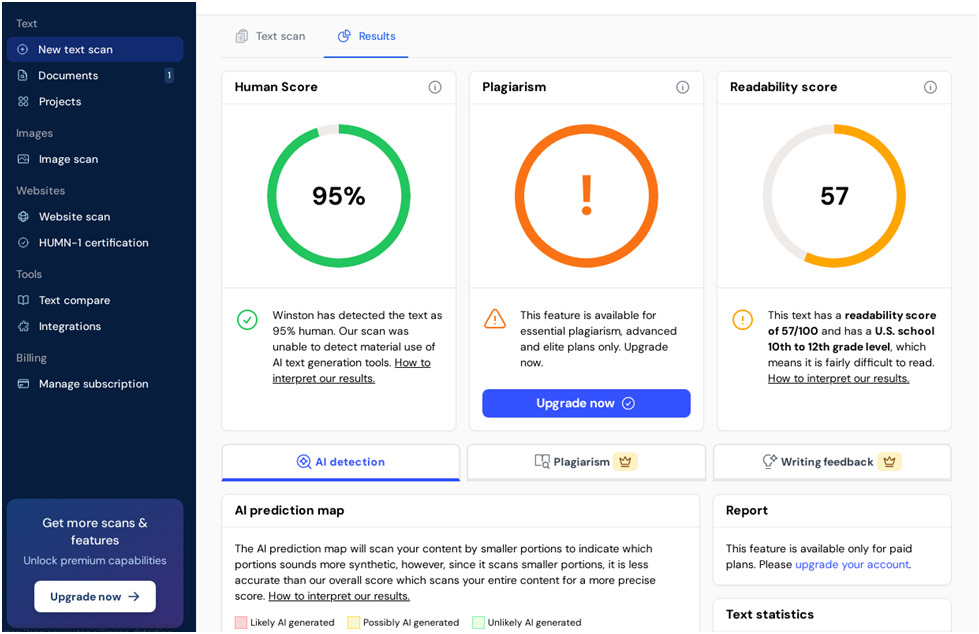
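To make "surface-level signals" concrete, here is a toy sketch of two of them. This is not GPTZero's method (its models aren't public at this level of detail); the function names are hypothetical, `burstiness` stands in for structural smoothness, and a tiny word-frequency model stands in for predictability, where real detectors use large language models.

```python
import math
import re
from collections import Counter

WORD = re.compile(r"[a-z']+")

def sentence_lengths(text: str) -> list[int]:
    """Word counts per sentence, using a naive split on . ! ? (illustrative only)."""
    sentences = [s for s in re.split(r"[.!?]+\s*", text) if s.strip()]
    return [len(s.split()) for s in sentences]

def burstiness(text: str) -> float:
    """Coefficient of variation of sentence length (std / mean).
    Values near 0 mean very uniform sentences, i.e. "structural smoothness"."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    mean = sum(lengths) / len(lengths)
    variance = sum((n - mean) ** 2 for n in lengths) / len(lengths)
    return math.sqrt(variance) / mean

def unigram_perplexity(text: str, reference: str) -> float:
    """Toy "predictability" score: perplexity of `text` under an add-one-smoothed
    word-frequency model built from `reference`. Lower means more predictable."""
    counts = Counter(WORD.findall(reference.lower()))
    total = sum(counts.values())
    vocab = len(counts) + 1  # one extra bucket for unseen words
    words = WORD.findall(text.lower())
    if not words:
        raise ValueError("text contains no words to score")
    log_prob = sum(math.log((counts[w] + 1) / (total + vocab)) for w in words)
    return math.exp(-log_prob / len(words))

uniform = "The report is clear. The data is sound. The plan is good."
print(burstiness(uniform))  # 0.0
```

A disciplined human writer who keeps sentences even and vocabulary plain lands in the same "smooth, predictable" zone as a model, which is exactly the overlap described above.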

Example 2: Winston AI and the limits of confidence scores

Winston AI positions itself strongly around accuracy and reporting, and it’s one of the better tools for institutional workflows.

But Winston AI's scores are still estimates, not evidence.

A “95% human” score doesn’t tell you:

- what tools were used

- how many edits happened

- whether AI assisted at any stage

That’s why Winston AI pairs detection with:

- reports

- comparisons

- certification layers

Even Winston AI implicitly acknowledges that detection alone isn’t enough.

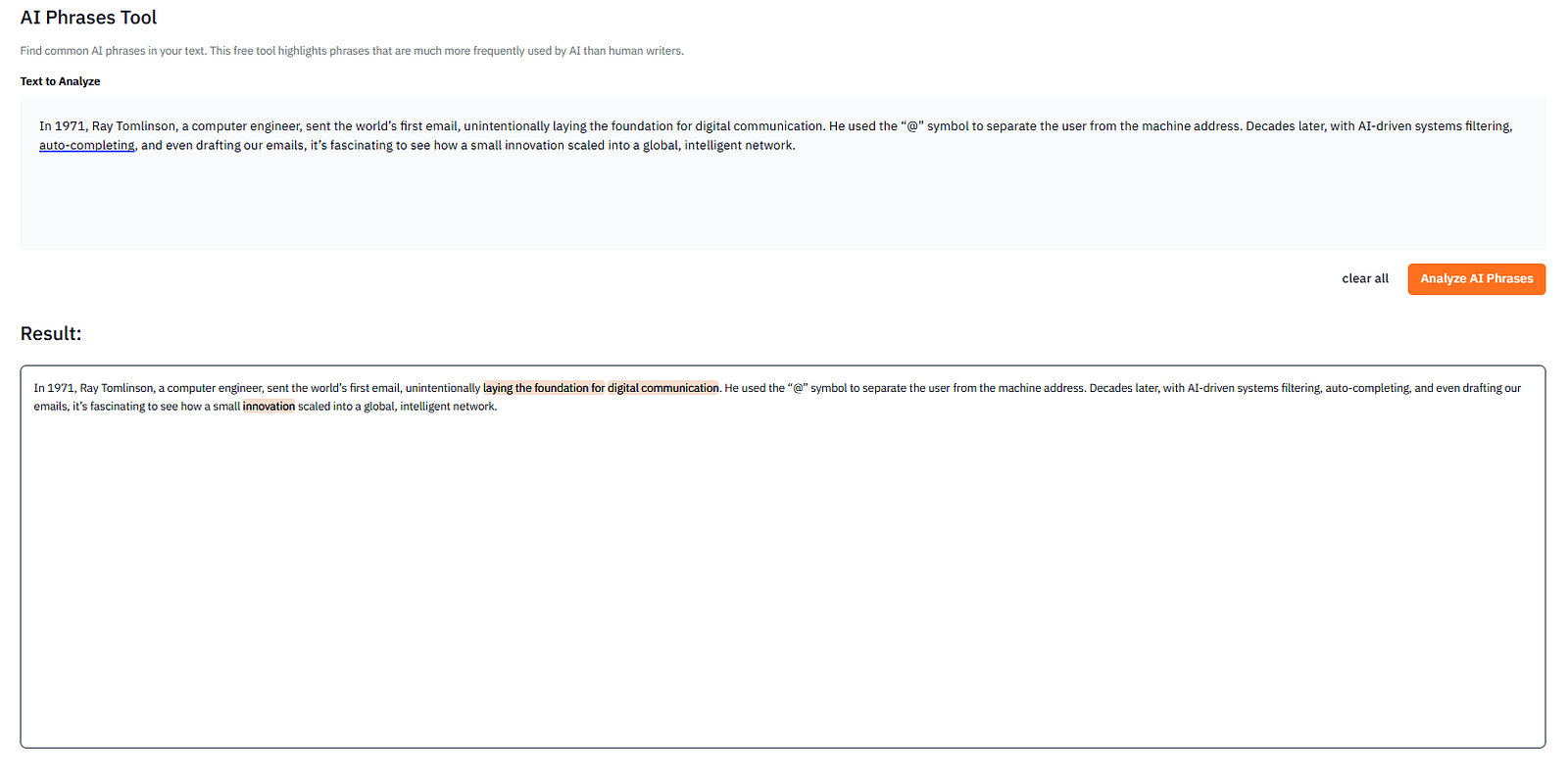

Example 3: Pangram Labs and the shift toward “AI-assisted”

Pangram Labs is a good example of where the industry is heading.

Instead of pretending everything is binary (AI vs human), Pangram Labs explicitly recognizes AI-assisted as a real category.

That’s important.

Because in 2026, AI-assisted is the new norm.
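Here's a minimal sketch of what a three-way label looks like compared with a binary one. It assumes a detector that scores individual segments (say, paragraphs); the `label_document` function and every threshold in it are hypothetical illustrations, not Pangram Labs' actual logic or settings.

```python
from enum import Enum

class Label(Enum):
    HUMAN = "human"
    AI_ASSISTED = "ai-assisted"
    AI = "ai"

def label_document(segment_scores: list[float],
                   flag_at: float = 0.5,
                   human_max: float = 0.1,
                   ai_min: float = 0.9) -> Label:
    """Map per-segment AI probabilities to a three-way label.
    Every threshold here is a hypothetical example, not a vendor setting."""
    if not segment_scores:
        raise ValueError("need at least one segment score")
    # Share of segments that individually look AI-like.
    flagged = sum(score >= flag_at for score in segment_scores) / len(segment_scores)
    if flagged <= human_max:
        return Label.HUMAN
    if flagged >= ai_min:
        return Label.AI
    return Label.AI_ASSISTED  # the middle band a binary detector has no word for

print(label_document([0.9, 0.2, 0.1, 0.8]).value)  # ai-assisted
```

A binary detector would have to push that half-flagged document into either "AI" or "human". The middle band is where most real writing now sits.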

Why probability scores fail in high-stakes situations

Probability scores fall apart when used as proof in:

- hiring decisions

- academic discipline

- content takedowns

- publishing disputes

This is because probability ≠ provenance. A detector saying “likely AI” is not evidence of:

- misconduct

- plagiarism

- automation abuse

It’s simply a statistical guess. And guesses shouldn’t carry consequences.
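A quick back-of-the-envelope calculation shows why. The numbers below are hypothetical assumptions, not measured rates for any tool: 1,000 essays, 10% of them AI-generated, a detector that catches 90% of AI text and wrongly flags 2% of human text.

```python
def flag_breakdown(n_docs: int, ai_share: float,
                   sensitivity: float, false_positive_rate: float) -> dict:
    """Expected outcome of running a detector over a batch of documents.
    All inputs are assumptions you supply; nothing here is a measured rate."""
    ai_docs = n_docs * ai_share
    human_docs = n_docs - ai_docs
    true_flags = ai_docs * sensitivity              # AI text correctly flagged
    false_flags = human_docs * false_positive_rate  # human text wrongly flagged
    flagged = true_flags + false_flags
    return {
        "flagged": flagged,
        "wrongly_flagged": false_flags,
        # Share of flagged documents that really are AI (positive predictive value).
        "precision": true_flags / flagged if flagged else 0.0,
    }

result = flag_breakdown(n_docs=1000, ai_share=0.10,
                        sensitivity=0.90, false_positive_rate=0.02)
print(result)
```

Under these assumptions the detector raises about 108 flags, and 18 of them land on human writers: roughly one flagged essay in six is innocent. Drop the AI share to 1% and about two flags in three are wrong, even though the detector itself hasn't changed.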

What provenance does better than detection

Provenance answers a different, and more useful, question:

“How was this content made?”

Instead of inferring authorship from patterns, provenance records:

- where content originated

- which tools were used

- how it changed over time

This is why open standards like C2PA/Content Credentials are gaining momentum, and why the Content Authenticity Initiative is pushing ecosystem-level adoption.

Detection looks at output, but provenance documents process.
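The core idea of "documenting process" can be sketched as a tamper-evident edit log. This is a simplified illustration, not the C2PA manifest format or any Content Credentials API: the `ProvenanceLog` class is hypothetical, and it only chains SHA-256 hashes. Real provenance systems also cryptographically sign their records, because without a signature anyone could rewrite and rehash the whole chain.

```python
import hashlib
import json
from dataclasses import dataclass, field

def sha256_hex(data: str) -> str:
    return hashlib.sha256(data.encode("utf-8")).hexdigest()

@dataclass
class ProvenanceLog:
    entries: list[dict] = field(default_factory=list)

    def record(self, actor: str, tool: str, action: str, content: str) -> dict:
        """Append one step: who did what, with which tool, producing which content."""
        prev = self.entries[-1]["entry_hash"] if self.entries else ""
        body = {
            "actor": actor,
            "tool": tool,
            "action": action,
            "content_hash": sha256_hex(content),
            "prev_hash": prev,  # links this step to the one before it
        }
        body["entry_hash"] = sha256_hex(json.dumps(body, sort_keys=True))
        self.entries.append(body)
        return body

    def verify(self, final_content: str) -> bool:
        """Check every link in the chain and that it ends at `final_content`."""
        prev = ""
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "entry_hash"}
            if body["prev_hash"] != prev:
                return False
            if sha256_hex(json.dumps(body, sort_keys=True)) != entry["entry_hash"]:
                return False
            prev = entry["entry_hash"]
        return bool(self.entries) and self.entries[-1]["content_hash"] == sha256_hex(final_content)

log = ProvenanceLog()
log.record("writer", "text editor", "draft", "First draft.")
log.record("writer", "AI assistant", "restructure", "Restructured draft.")
log.record("writer", "text editor", "final edit", "Final text.")
print(log.verify("Final text."))  # True
log.entries[1]["tool"] = "text editor"  # try to hide the AI step
print(log.verify("Final text."))  # False
```

Notice that the log doesn't guess whether AI was involved. It records that an AI assistant restructured the draft, and any attempt to quietly erase that step breaks verification.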

The future: detectors as signals, provenance as proof

AI detectors aren’t going away, but their role is changing. In 2026:

- Detectors are early warning signals

- Human review provides judgment

- Provenance provides verification
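Those three roles translate into a simple routing rule. This is a hypothetical sketch: the `Evidence` and `triage` names and the 0.8 threshold are illustrative assumptions, not any product's workflow. The design choice that matters is that a detector score can only send a document to a human; it never produces a verdict by itself.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Evidence:
    detector_score: float                # any detector's "likely AI" score, 0..1
    provenance_verified: Optional[bool]  # None = no provenance record attached

def triage(evidence: Evidence, review_threshold: float = 0.8) -> str:
    """Route a document; the threshold is a hypothetical example value."""
    if evidence.provenance_verified is True:
        return "verified: decide from the provenance record"
    if evidence.provenance_verified is False:
        return "provenance check failed: send to human review"
    if evidence.detector_score >= review_threshold:
        return "detector signal: send to human review"
    return "no action"

print(triage(Evidence(detector_score=0.95, provenance_verified=None)))
```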

Any system that relies on probability scores alone will continue to:

- mislabel clean human writing

- miss well-edited AI content

- punish good writers for clarity

The future isn’t better guessing, but better receipts.

Final takeaway

If your entire authenticity strategy depends on a percentage score, it’s already outdated.

AI detection helped us through the first wave of generative content. Provenance is how we move forward without breaking trust.

And in 2026, trust, not detection, is the real problem to solve.

Further reading

I’ve tested and compared 30+ AI detectors in 2026, breaking down false positives, real-world accuracy, and where each tool works (and fails).

Affiliate disclosure

The links in this article are affiliate links. If you choose to purchase through them, I may earn a small commission at no extra cost to you.

That said, every review, test, and comparison in this piece is based on my own hands-on use of the tools discussed. I test these products with real writing examples, note both strengths and limitations, and include tools only if they add genuine value. Compensation never influences rankings, recommendations, or conclusions.

I believe transparency matters, especially when writing about AI tools, and I’ll always prioritize accuracy and honesty over affiliate incentives.