AI Humanizers vs Human Editing: What Actually Improves Content Quality

AI humanizers are often sold as a shortcut: paste AI-generated text, click a button, and get something that magically sounds human.

After testing 30+ tools over the past year, I’ve learned this the hard way:

Humanizers can improve writing.

Human editing determines whether it’s actually good.

This article breaks down where AI humanizers genuinely help, where they hurt content quality, and why human judgment still does most of the heavy lifting in 2026.

What AI humanizers are genuinely good at

Used correctly, AI humanizers reduce friction. They don't replace thinking.

1. Cleaning up robotic sentence patterns

Raw AI drafts tend to rely on:

- Predictable transitions (“Moreover,” “In conclusion,” “It is important to note…”)

- Uniform sentence length

- Overly neutral tone
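
If you want a rough, scriptable way to spot the first two patterns before deciding whether a draft needs a humanizer pass, here is a minimal Python sketch. It is not how any of the tools in this article work. The phrase list, the function names, and the naive sentence splitter are all assumptions for illustration.

```python
import re
import statistics

# Hypothetical phrase list for illustration; extend it with your own tells.
STOCK_TRANSITIONS = ("moreover", "in conclusion", "it is important to note")


def split_sentences(text):
    """Naive splitter: breaks after ., !, or ? followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def draft_tells(text):
    """Return rough signals of robotic patterns in a draft."""
    sentences = split_sentences(text)
    lengths = [len(s.split()) for s in sentences]
    lowered = text.lower()
    return {
        "sentences": len(sentences),
        # How many times the stock transitions appear anywhere in the text.
        "stock_transitions": sum(lowered.count(p) for p in STOCK_TRANSITIONS),
        # A spread near zero means sentences are almost all the same length.
        "length_spread": statistics.pstdev(lengths) if lengths else 0.0,
    }
```

A low `length_spread` combined with several stock transitions is only a hint that the cadence needs work. It says nothing about tone or voice, which still take a human read.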

In my tests, tools like GPTHuman.ai handled this well in long-form blog sections. When I ran 800–1,200-word SEO-style drafts through its Balanced and Enhanced modes, the output showed better rhythm and fewer obvious LLM tells.

But the text still needed a final human pass to sound like me, not like “well-edited AI.”

Takeaway: Humanizers are good at smoothing patterns. They’re not good at restoring personality.

2. Improving clarity without rewriting ideas

Some tools behave more like editors than transformers.

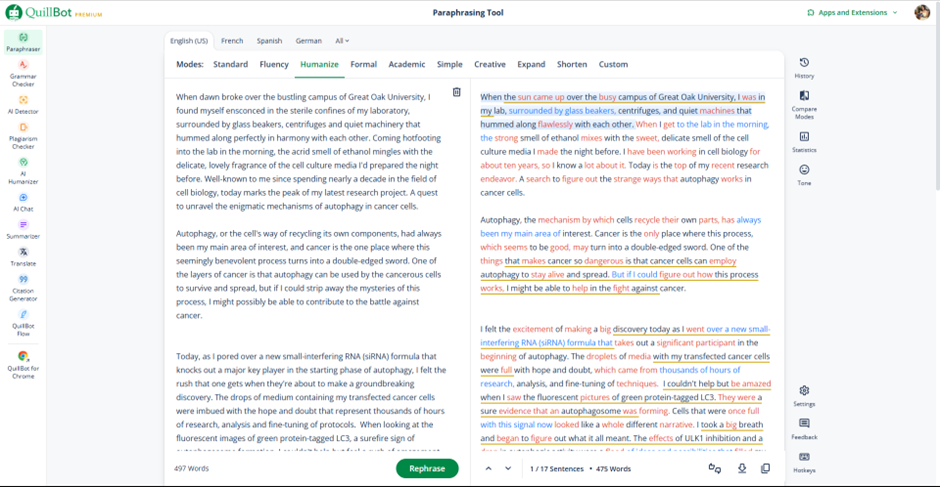

Quillbot is a good example. In my original testing, Quillbot didn’t attempt to overhaul paragraphs. Instead, it:

- Tightened phrasing

- Reduced repetition

- Improved flow

Most importantly, it preserved meaning, which is rare and valuable.

That makes it useful after you’ve already shaped your argument, not before.

Takeaway: Tools that edit lightly often outperform tools that rewrite aggressively.

Where AI humanizers hurt content quality

3. Meaning drift in longer content

As input length increases, so does risk.

In all-in-one academic platforms like EssayDone.ai, the workflow is convenient: generate → humanize → check. But in longer explanatory passages, I noticed something subtle but dangerous: emphasis shifted.

The argument wasn’t wrong, but it wasn’t quite the same either.

For SEO, education, or thought leadership, this kind of drift matters. Search engines reward consistency and clarity. Readers notice when ideas feel “off,” even if they can’t articulate why.

Takeaway: The longer the input, the more human oversight you need.

4. Readability trade-offs at the budget tier

Budget-friendly tools can still be effective, with caveats.

When I tested WriteHuman AI, sentence variation improved and obvious AI phrasing decreased. However, readability occasionally dipped: some sentences became longer or more complex than necessary.

Nothing catastrophic, but enough that a quick read-aloud was required to fix flow.
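
A read-aloud is still the real test, but a quick script can point you to the sentences worth reading first. The sketch below is a minimal example under my own assumptions: the 30-word threshold, the function names, and the naive splitter are illustrative, not a standard readability metric.

```python
import re

# Assumed threshold for illustration; tune it to your own style.
MAX_WORDS = 30


def _sentences(text):
    """Naive splitter: breaks after ., !, or ? followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def long_sentences(text, max_words=MAX_WORDS):
    """Return the sentences whose word count exceeds max_words."""
    return [s for s in _sentences(text) if len(s.split()) > max_words]


def average_length(text):
    """Mean words per sentence; 0.0 for empty input."""
    sentences = _sentences(text)
    if not sentences:
        return 0.0
    return sum(len(s.split()) for s in sentences) / len(sentences)
```

Running `average_length` on the draft before and after humanizing shows whether sentences grew, and `long_sentences` on the humanized version lists the ones to check by ear.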

Takeaway: Affordable tools save time, not effort. You still need to read and edit.

What human editing does that tools still can’t

This is where humans win consistently.

Humans add:

- Intent (why this matters now)

- Specificity (examples, constraints, trade-offs)

- Opinion (what you’d actually recommend)

- Accountability (standing by claims)

No humanizer I’ve tested reliably adds:

- Lived experience

- Original judgment

- Ethical boundaries

- Contextual restraint

Those elements are exactly what modern search systems and real readers reward.

The workflow that actually improves quality (2026)

After dozens of tests, this is the only process I trust:

1. Outline and draft (AI-assisted is fine)

2. Humanize lightly (to fix cadence and repetition)

3. Human edit for meaning, voice, and intent

4. Publish only what you can defend

Anything that skips step 3 produces content that may pass tools but fails readers.

The real conclusion

AI humanizers are not shortcuts to quality. They're accelerators, and accelerators amplify both good and bad writing.

Used thoughtfully, they save time and reduce friction. Used blindly, they flatten voice, blur meaning, and weaken trust.

That’s why, in my full 2026 comparison of AI humanizers, I rank tools not on promises or scores, but on how well they support human judgment rather than replace it.

If a paragraph doesn’t sound like something I’d say out loud, no tool gets the final word.

Affiliate disclosure: This article contains affiliate links. If you purchase a subscription through these links, I may earn a small commission at no extra cost to you. Thank you for supporting my work!