How I Test AI Writing Tools for SEO (My 2026 Framework)

AI writing tools are everywhere in 2026. But most comparisons miss the only question that actually matters:

Does this tool help content rank, or just get written faster?

After testing multiple AI writing tools across SEO-heavy projects, I stopped judging them by output quality alone. Instead, I built a framework focused on publishability, intent alignment, and real ranking potential.

Below is the exact process I now use to evaluate AI writing tools for SEO, and why it filters out hype so quickly.

Step 1: I Test Different Types of SEO Content (Not Just Blog Posts)

AI tools behave very differently depending on the content format.

For every tool, I test it on at least three SEO-relevant formats:

- A how-to guide (informational intent)

- A comparison or roundup (commercial intent)

- A research- or insight-led article (authority intent)

If a tool performs well only on generic blog posts but breaks down elsewhere, it’s not SEO-reliable.

This is where many tools quietly fail.

Step 2: I Lock the Constraints Before Writing Anything

To keep results comparable, I fix the constraints before generating text:

- Word count: 1,200–1,800 words

- Tone: Conversational, neutral, non-salesy

- Target keyword: one primary keyword plus supporting variations

- Search intent: Defined upfront (not guessed mid-draft)

AI tools that require excessive prompt engineering just to stay on-topic lose points here.

SEO workflows should reduce friction, not add it.
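If you want the locked constraints to stay identical across every tool you test, it can help to write them down as a small config and check each draft against it. The sketch below is a hypothetical Python helper: `Constraints`, `word_count`, and `check_draft` are illustrative names, not features of any tool mentioned here. It only covers what can be measured mechanically (word count and keyword presence). Tone and search intent are recorded so every tool gets the same brief, but they still need a human read.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Constraints:
    # Locked before any text is generated (values taken from Step 2).
    primary_keyword: str
    variations: list[str] = field(default_factory=list)
    min_words: int = 1200
    max_words: int = 1800
    # Recorded for consistency only; not checked automatically below.
    tone: str = "conversational, neutral, non-salesy"
    search_intent: str = "informational"


def word_count(text: str) -> int:
    # Rough count: runs of word characters, apostrophes, and hyphens.
    return len(re.findall(r"[\w'-]+", text))


def check_draft(text: str, c: Constraints) -> dict[str, bool]:
    # Naive case-insensitive substring matching; real keyword analysis
    # (stemming, phrase variants) would need more than this.
    lowered = text.lower()
    n = word_count(text)
    return {
        "word_count_ok": c.min_words <= n <= c.max_words,
        "primary_keyword_present": c.primary_keyword.lower() in lowered,
        "has_variation": any(v.lower() in lowered for v in c.variations),
    }


brief = Constraints(
    primary_keyword="AI writing tools for SEO",
    variations=["SEO writing assistant"],
)
draft = "AI writing tools for SEO " + "word " * 1295
print(word_count(draft))  # 1300
print(check_draft(draft, brief))
# {'word_count_ok': True, 'primary_keyword_present': True, 'has_variation': False}
```

Running the same `brief` against every tool's draft is the point: if a tool needs a different brief just to pass, that is the friction this step is meant to expose.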

Step 3: I Evaluate Structure Before Content Quality

Before reading a single paragraph, I look at:

- Heading logic

- Section order

- Depth vs fluff

- Whether the outline actually matches the pages that currently rank

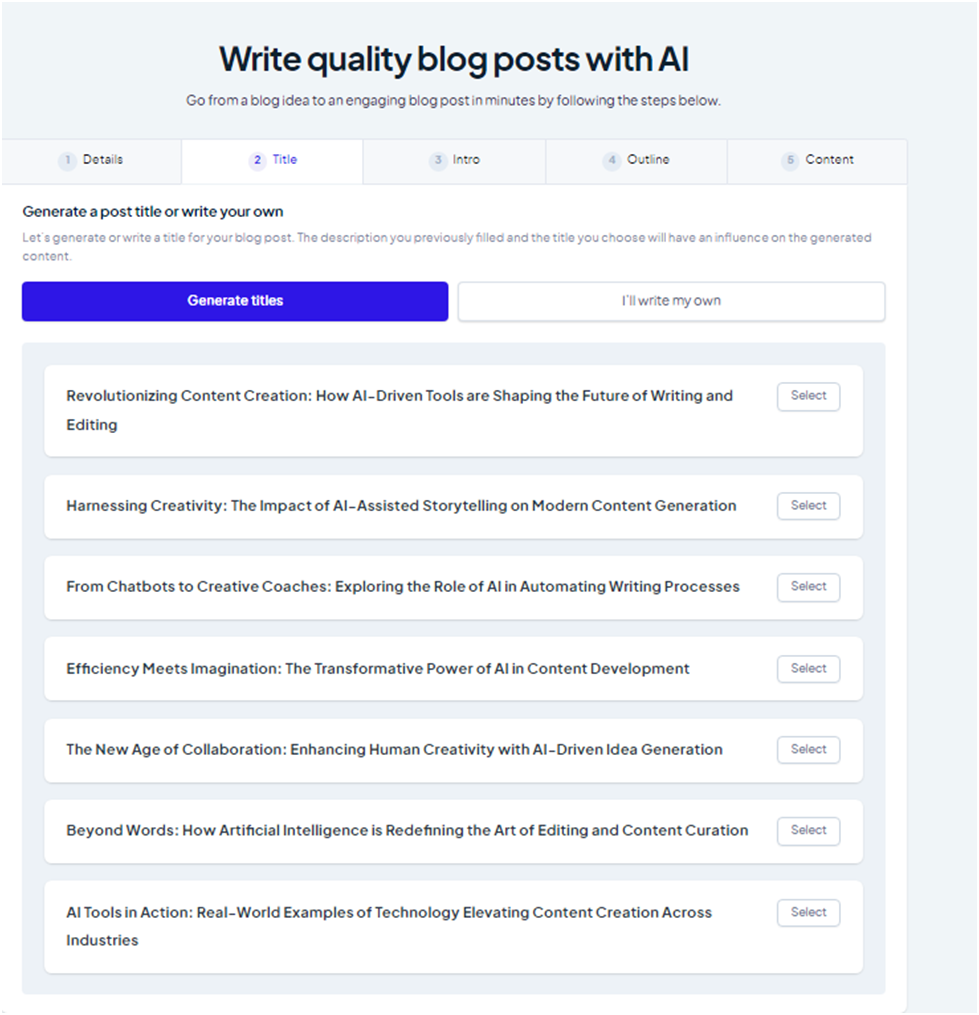

For example, when testing Copymatic, I paid close attention to its outline generation and SEO scoring features. The structure it produced was often SERP-aligned, but only after careful keyword selection.

That reinforced a key rule of this framework:

Good structure amplifies good keywords. It can’t rescue bad ones.

Step 4: I Check for Research Support and Factual Control

In 2026, factual accuracy is non-negotiable.

Some tools help here. Some don’t.

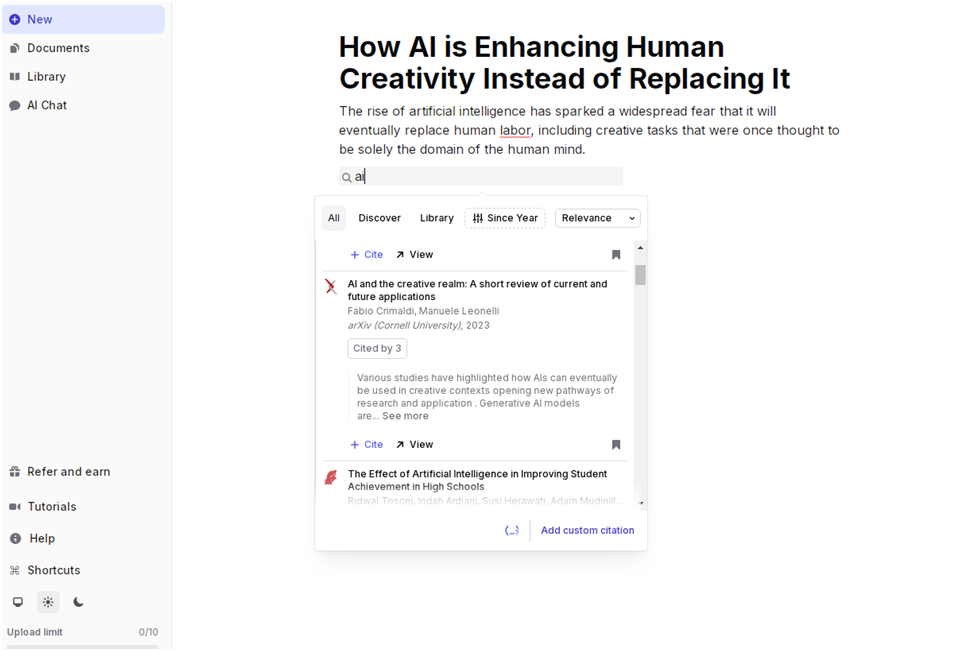

When testing Jenni AI, I specifically evaluated:

- citation suggestions

- fact attribution

- how easily sources could be reviewed or replaced

This matters because SEO content that looks polished but contains shaky claims tends to decay quickly, even if it ranks briefly.

Tools that support research reduce long-term risk, not just editing time.

Step 5: I Test Whether the Tool Preserves Author Voice

SEO content that ranks today rarely sounds generic.

To test this, I ask:

- Can the tool adapt to an existing writing style?

- Does it overwrite nuance?

- Can I easily insert opinion without fighting the draft?

This is where Hypertxt.ai stood out during testing. Its ability to adapt to an uploaded writing sample made the draft feel less “templated” and more editorial, which matters when building topical authority.

Voice control isn’t a cosmetic feature anymore. It’s part of trust.

Step 6: I Measure Edit Time, Not Output Length

Here’s the most important metric in my framework:

Could I publish this after less than 30 minutes of editing?

That includes:

- tightening intros

- adding examples

- light fact checks

- internal links

If a tool consistently requires heavy rewrites, restructuring, or tone correction, it doesn’t pass, no matter how impressive the feature list looks.
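To keep the 30-minute rule honest, it helps to log edit time per task instead of estimating afterwards. Here is a minimal sketch, assuming you time each of the four tasks above yourself for every draft. `EditLog` and `is_publishable` are hypothetical names, and the heavy-rewrite flag is the editor's own judgment; the code does not detect it.

```python
from dataclasses import dataclass

# Threshold from Step 6: publishable after *less than* 30 minutes of editing.
PUBLISH_THRESHOLD_MINUTES = 30


@dataclass
class EditLog:
    # Minutes spent on each light-editing task, timed manually per draft.
    tightening_intros: float = 0.0
    adding_examples: float = 0.0
    light_fact_checks: float = 0.0
    internal_links: float = 0.0
    # Set by the editor if the draft needed heavy rewrites,
    # restructuring, or tone correction.
    needed_heavy_rewrite: bool = False

    def total_minutes(self) -> float:
        return (self.tightening_intros + self.adding_examples
                + self.light_fact_checks + self.internal_links)


def is_publishable(log: EditLog) -> bool:
    # Heavy rework fails the draft regardless of how long it took.
    if log.needed_heavy_rewrite:
        return False
    return log.total_minutes() < PUBLISH_THRESHOLD_MINUTES


drafts = [
    EditLog(5, 10, 8, 4),                              # 27 minutes
    EditLog(5, 10, 10, 5),                             # exactly 30 minutes
    EditLog(3, 4, 2, 1, needed_heavy_rewrite=True),    # fast, but rewritten
]
print([is_publishable(d) for d in drafts])  # [True, False, False]
```

Logging per draft, rather than per tool, makes the "consistently" part visible: a tool that passes once and fails the next three times shows up as a pattern, not an impression.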

SEO tools should save decision time, not just typing time.

Step 7: I Look at Where the Tool Fits in a Real SEO Workflow

No AI tool works in isolation.

I evaluate:

- export options (Docs, WordPress, Markdown)

- how easily drafts move into an SEO pipeline

- whether the tool supports iteration, not just generation

Tools that only shine during first drafts but fail during revision don’t scale well for SEO.

What This Framework Filters Out

Using this approach, several patterns become obvious:

- Tools that over-optimize without understanding intent

- AI writers that generate volume but lack structure

- Platforms that promise rankings without strategy

- “All-in-one” tools that do many things poorly

SEO rewards clarity, not speed.

How This Framework Connects to My Tool Reviews

I apply this same framework whenever I review AI writing tools for SEO, especially in long-form comparisons where rankings are the goal, not just content output.

The detailed reviews focus on how each tool behaves inside this process:

- where it helps

- where it breaks

- and who it’s actually for

This article explains the testing logic.

The reviews show the results of applying it.

Final Thought

AI writing tools didn’t make SEO easier.

They made bad strategy obvious.

In 2026, the difference between ranking and disappearing isn’t which AI you use; it’s whether your workflow respects intent, structure, and editorial judgment.

Tools assist. Frameworks decide outcomes.

Which do you think matters more for your long-term content strategy: the tool or the framework?

Affiliate Disclosure: This article may contain affiliate links. If you make a purchase through these links, I may earn a small commission at no extra cost to you. This helps support the channel and allows me to keep creating valuable content. Thank you for your support!