Why AI Detectors Flag Human Writing as AI (False Positives Explained)


If you’ve ever run your own writing through an AI detector and felt personally attacked by the results, you’re not alone.

In 2026, one of the biggest problems with AI detection is false positives, where clearly human-written content gets flagged as “likely AI.”

And no, this doesn’t mean your writing sounds like a robot.

It means AI detectors are doing exactly what they’re designed to do: pattern matching, not mind reading.

Let’s break down why this happens, where it happens most often, and what to do about it.

AI detectors don’t detect authorship; they detect patterns

This is the most important thing to understand. AI detectors do not know:

- who wrote a piece of content

- whether AI was used intentionally

- how many drafts or edits happened

They analyze statistical patterns such as:

- sentence predictability

- structural consistency

- transition frequency

- word distribution

If your writing looks too smooth, too consistent, or too optimized, detectors may assume it’s AI even when it isn’t.
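To make those signals concrete, here is a minimal Python sketch of the kind of surface statistics involved. It is an illustration only, not how GPTZero or any other tool actually works: the function name `style_signals`, the `TRANSITIONS` word list, and the three proxies are my own assumptions. Sentence predictability is left out because measuring it requires a language model.

```python
import re
import statistics

# Hypothetical transition-word list; real detectors do not publish theirs.
TRANSITIONS = {"however", "moreover", "therefore", "furthermore",
               "additionally", "consequently"}

WORD = re.compile(r"[a-z'’]+")


def style_signals(text: str) -> dict:
    """Compute rough, illustrative proxies for the patterns listed above."""
    # Naive sentence split on ., ! and ? (good enough for a sketch).
    sentences = [s for s in re.split(r"[.!?]+\s*", text) if s.strip()]
    words = WORD.findall(text.lower())
    lengths = [len(WORD.findall(s.lower())) for s in sentences]
    total = len(words)
    return {
        "sentence_count": len(sentences),
        # Low spread in sentence length stands in for "structural consistency".
        "sentence_length_stdev": statistics.pstdev(lengths) if lengths else 0.0,
        # Share of words that are stock transitions ("transition frequency").
        "transition_rate": sum(w in TRANSITIONS for w in words) / total if total else 0.0,
        # Unique words divided by total words ("word distribution").
        "type_token_ratio": len(set(words)) / total if total else 0.0,
    }


print(style_signals("The plan works. However, the plan fails. We try again."))
```

Careful editing tends to push numbers like these in the same direction as machine output: even sentence lengths, tidy transitions, a controlled vocabulary. None of them says anything about who typed the words.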

This is why experienced writers, editors, academics, and marketers, whose work tends to be the most polished, can be flagged more often than casual writers.

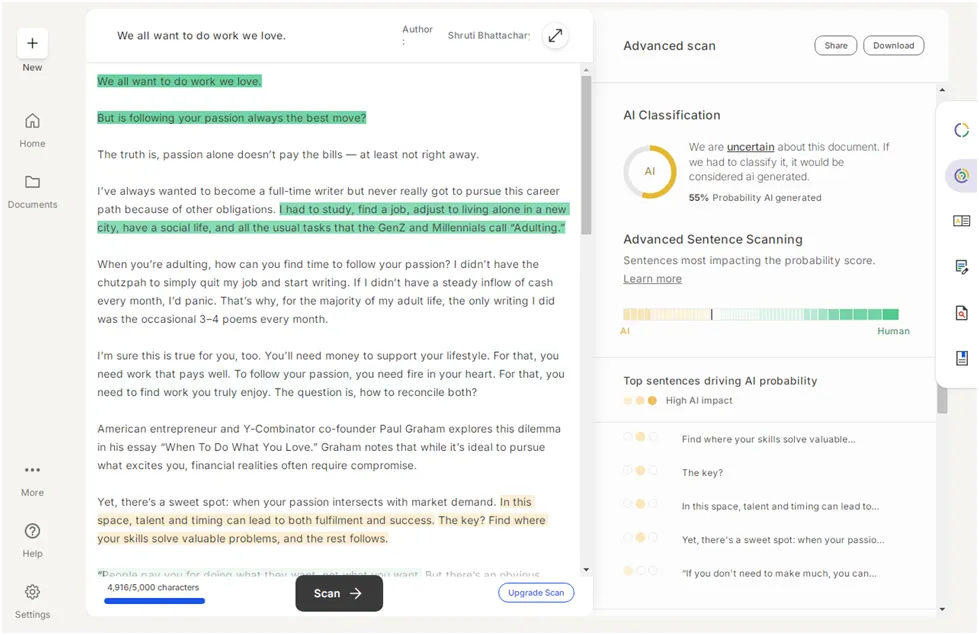

Example 1: GPTZero and “clean” professional writing

GPTZero is widely used in education and publishing, and it’s often accurate with raw AI output.

But it struggles with polished human writing.

In long-form articles that are:

- well structured

- clearly argued

- edited for flow

GPTZero frequently returns results like:

- “Uncertain”

- “Mixed authorship”

- “Likely AI-assisted”

That doesn’t mean AI was used.

It means the writing lacks the messiness, inconsistency, and stylistic noise that detectors associate with “human.”

Ironically, good writing triggers suspicion.

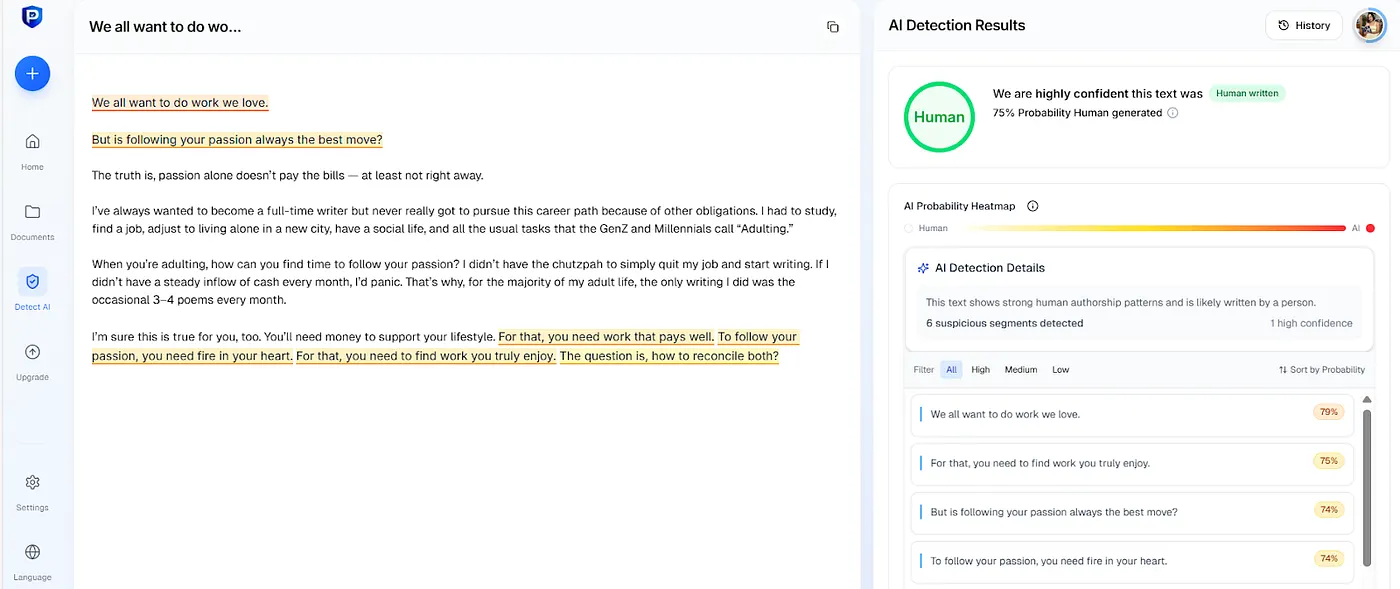

Example 2: Proofademic’s strict academic bias

Proofademic is designed for academic integrity, not creative nuance.

That focus is intentional. The tool prioritizes:

- strict sentence analysis

- conservative probability thresholds

- risk-averse scoring

As a result, fully human academic essays can still score 60–80% “likely AI.”

This is because academic writing is:

- formal

- standardized

- low-variation by design

To a detector trained on large datasets, that looks statistically similar to AI output, especially when the writing is clear and well edited.

Proofademic isn’t “wrong.” It’s doing what it was built to do, but that makes it unsuitable for judging casual or professional writing without human review.

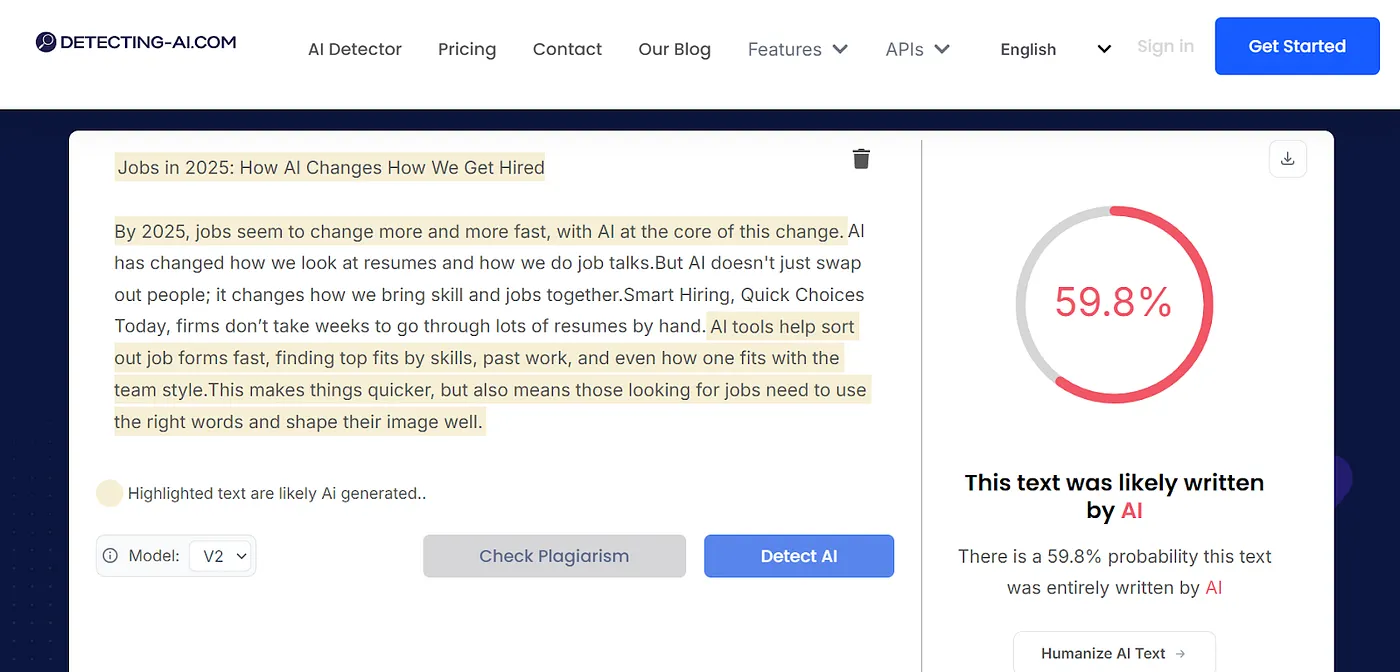

Example 3: Detecting-AI and edited content

Detecting-AI highlights another major cause of false positives: editing layers.

Here’s a common real-world workflow:

- Human writes first draft

- Grammarly or editor cleans it up

- Minor rephrasing for clarity

- Final polish

By the end, the text has:

- consistent pacing

- reduced variation

- fewer stylistic quirks

Detecting-AI (and many similar tools) often flags this as AI even when no generative model was used at all.

The detector isn’t seeing AI. It’s seeing optimization.

Why short-form content is flagged even more

False positives spike dramatically with:

- resumes

- bullet points

- bios

- short summaries

This is because short-form writing:

- uses repetitive verbs (“led,” “built,” “managed”)

- removes personality by necessity

- lacks narrative context

There simply isn’t enough data for detectors to analyze.
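A rough way to see the sample-size problem: treat a signal such as the transition-word rate as a proportion estimated from the words in the text. The sketch below uses the textbook standard error of a proportion. The 5% rate and the word counts are made-up numbers, and treating words as independent samples is a simplifying assumption.

```python
import math


def standard_error(rate: float, n_words: int) -> float:
    """Standard error of a proportion estimated from n_words samples."""
    if n_words <= 0:
        raise ValueError("n_words must be positive")
    return math.sqrt(rate * (1 - rate) / n_words)


# Hypothetical 5% transition-word rate, measured on texts of different lengths.
for n_words in (40, 400, 4000):
    print(n_words, round(standard_error(0.05, n_words), 4))
# prints:
# 40 0.0345
# 400 0.0109
# 4000 0.0034
```

With these assumed numbers, the uncertainty for a 40-word bio is most of the size of the rate being measured, while at 4,000 words it shrinks to a small fraction of it. A score built on the short text is mostly noise.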

The result:

- human resumes flagged as AI

- AI-generated bullets passing as human

This is why detector scores on resumes should never be treated as proof.

The issue: “AI-assisted” is the default now

Most modern writing is neither fully human nor fully AI. It’s:

- AI for ideation

- human for judgment

- AI for cleanup

- human for final intent

Detectors that only offer binary labels (AI vs human) are fundamentally outdated.

This is why newer tools are moving toward:

- confidence bands

- mixed authorship categories

- reporting instead of verdicts

It is also why false positives will continue until the industry shifts its focus from verdicts to reporting.
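As a sketch of what reporting instead of verdicts could look like, the snippet below maps a raw score to a band with an explicit inconclusive middle. The `DetectionReport` type, the `to_report` function, and the 0.30 and 0.85 cutoffs are all hypothetical and not taken from any real detector.

```python
from dataclasses import dataclass


@dataclass
class DetectionReport:
    score: float  # detector's AI-likelihood score, between 0 and 1
    band: str     # coarse band instead of a binary AI/human label
    note: str     # guidance on how to read the band


def to_report(score: float, low: float = 0.30, high: float = 0.85) -> DetectionReport:
    """Map a raw score to a band. The cutoffs are illustrative assumptions."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score < low:
        return DetectionReport(score, "likely human", "No action suggested.")
    if score < high:
        return DetectionReport(score, "inconclusive or mixed",
                               "Needs human review and context.")
    return DetectionReport(score, "elevated AI likelihood",
                           "A signal to review, not proof.")


print(to_report(0.70).band)  # inconclusive or mixed
```

The design choice that matters here is the wide middle band: a 70% score, which a binary tool would round up to “AI,” is reported as something a person still has to look at.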

What to do when your human writing is flagged

If an AI detector flags your writing as AI:

- Don’t panic

- Check whether the text is short, heavily edited, or highly standardized

- Treat the score as a signal, not a verdict

- Use human review, writing history, and context for decisions

In high-stakes situations (hiring, grading, publishing), detector output alone is not sufficient evidence.
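A quick piece of arithmetic shows why a flag on its own is weak evidence. The sketch below applies Bayes' rule to a batch of submissions. Every number in it is hypothetical (the share of AI-written texts, the detection rate, and the false positive rate), and the function name is my own, so treat it as an illustration rather than a measurement of any tool.

```python
def share_of_flags_that_are_human(ai_share: float,
                                  detection_rate: float,
                                  false_positive_rate: float) -> float:
    """Of all flagged texts, what fraction was actually written by humans?"""
    flagged_ai = ai_share * detection_rate
    flagged_human = (1 - ai_share) * false_positive_rate
    total_flagged = flagged_ai + flagged_human
    if total_flagged == 0:
        raise ValueError("nothing would be flagged with these inputs")
    return flagged_human / total_flagged


# Hypothetical inputs: 10% of texts are AI-written, the detector catches 90%
# of those, and it wrongly flags 5% of human-written texts.
print(round(share_of_flags_that_are_human(0.10, 0.90, 0.05), 3))  # 0.333
```

Under these assumed numbers, one in three flagged texts is human-written, even though the detector looks accurate on paper. That is the gap human review, writing history, and context are meant to close.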

Final takeaway

AI detectors are not lie detectors.

They are probability engines trained on patterns, and patterns overlap more than most people realize.

False positives don’t mean you cheated. More often, they mean your writing is clean, optimized, and modern.

And in 2026, that’s normal.

Further reading

If you want a full breakdown of which tools perform best (and where they fail), I’ve tested and compared 30+ AI detectors in 2026, including false positives, pricing, and real workflows.

Affiliate disclosure

The links in this article are affiliate links. If you choose to purchase through them, I may earn a small commission at no extra cost to you.

That said, every review, test, and comparison in this piece is based on my own hands-on use of the tools discussed. I test these products with real writing examples, note both strengths and limitations, and include tools only if they add genuine value. Compensation never influences rankings, recommendations, or conclusions.

I believe transparency matters, especially when writing about AI tools, and I’ll always prioritize accuracy and honesty over affiliate incentives.